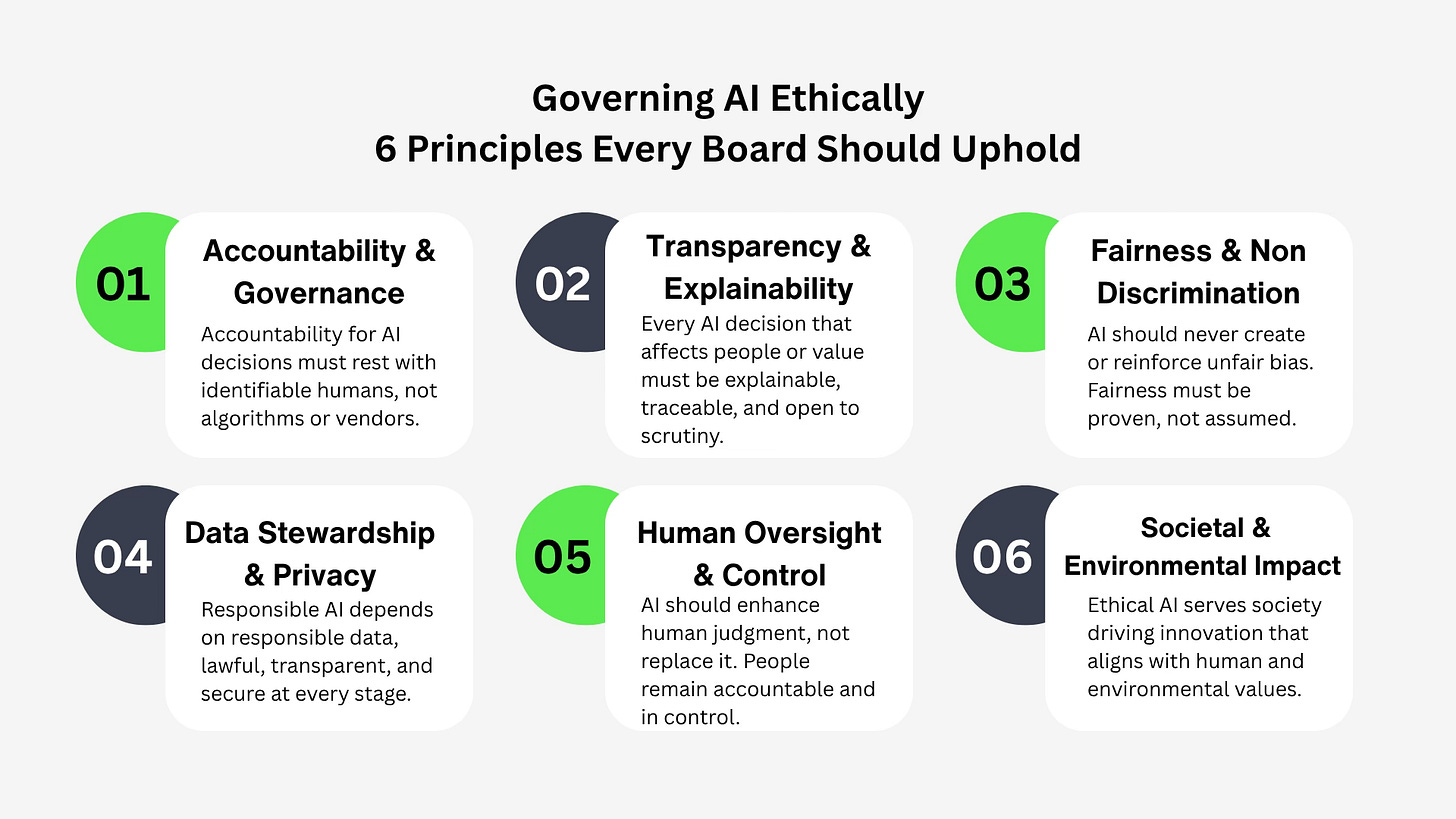

Governing AI Ethically

6 Principles Every Board Should Uphold

As boards move into AI implementation, they need to be clear about the ethical considerations of how AI is used.

Artificial intelligence is reshaping business models, redefining work, and reframing trust. But as adoption accelerates, so too do the ethical and governance challenges that accompany it.

In my recent work across sectors, one of the key questions we have been asking is:

“How can we harness AI’s potential without losing sight of our values, responsibilities, and public trust?”

The Promise and the Problem

AI can help organisations strengthen their relationships with both their customers and the people who create value for those customers. It does this through everything from personalised customer experiences and predictive insights to automation that frees people for higher-value work. But it also opens up new forms of risk.

Poorly governed AI can:

Amplify bias and discrimination in recruitment, lending, or pricing.

Erode privacy through opaque data practices and uncontrolled model training.

Undermine accountability when algorithms make, or appear to make, decisions once owned by humans.

Damage trust with regulators, customers, and employees when outcomes cannot be explained or justified.

These are not theoretical concerns. Regulators are watching, and public sentiment is shifting. The EU AI Act, which entered into force in 2024 with most of its obligations applying from 2026, will set a new global benchmark for AI safety, transparency, and accountability. The UK is taking a more flexible "pro-innovation" approach but is nonetheless aligning around the same core ethical principles: fairness, transparency, accountability, and human oversight.

Why Ethics Must Lead, Not Follow

We need to be clear about where our ethical lines are, and to hold to them responsibly.

Ethical governance gives organisations a licence to operate, and to innovate, without crossing the legal, reputational, or societal boundaries that would expose them to serious risk.

When boards consider AI strategy, the ethical questions should not be abstract; they should be deeply practical:

Who is accountable if an AI system discriminates or makes an error?

How transparent are our models, and can we explain their decisions in plain language?

Do our AI systems reflect our values and ESG commitments?

Are we prepared for the regulatory scrutiny that’s coming?

Embed ethics early to avoid the cost of ethical failure later: regulatory fines, brand damage, and a loss of trust that takes years to rebuild.

Six Principles for Ethical AI Governance

At board level, six guiding principles provide a foundation for responsible AI:

Accountability and Governance: Accountability for AI decisions must rest with identifiable humans, not algorithms or vendors.

Transparency and Explainability: Every AI decision that affects people or value must be explainable, traceable, and open to scrutiny.

Fairness and Non-Discrimination: AI should never create or reinforce unfair bias. Fairness must be proven, not assumed.

Data Stewardship and Privacy: Responsible AI depends on responsible data: lawful, transparent, and secure at every stage.

Human Oversight and Control: AI should enhance human judgment, not replace it. People remain accountable and in control.

Societal and Environmental Impact: Ethical AI serves society, driving innovation that aligns with human and environmental values.

These principles are the foundation for trustworthy innovation.

A Call to Boards and Leaders

The governance of AI will define corporate integrity in the next decade, much as financial governance did in the last.

Boards must now:

Ensure AI ethics sits within their risk and governance frameworks.

Demand transparency, auditability, and oversight mechanisms for all AI systems.

Align AI use with organisational purpose, ESG priorities, and stakeholder trust.

Ethical AI is a strategic investment in long-term value and trust.

If you found this useful, subscribe for insights on AI governance, transformation, and the intersection of ethics and innovation.